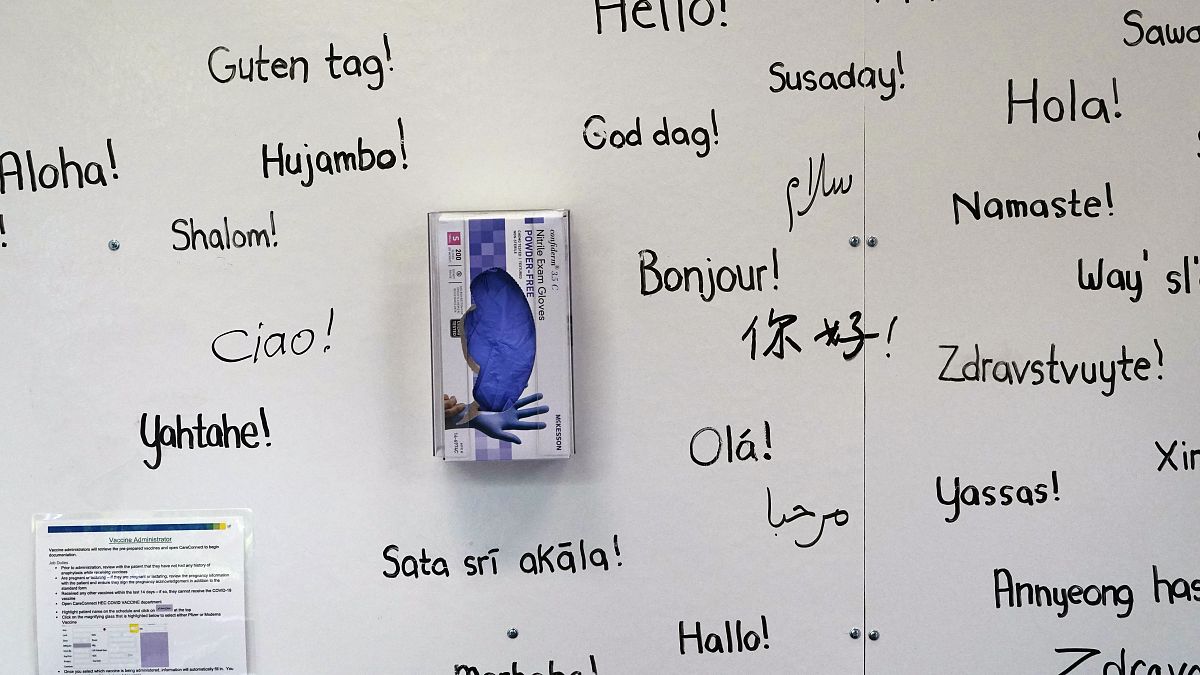

It will soon be easier to see Facebook and Instagram posts in lesser-spoken global languages, but an expert suggests that to improve the tool Meta should talk to native speakers.

It will soon be easier to see Facebook and Instagram posts in 200 lesser-spoken languages around the world.

Meta’s No Language Left Behind (NLLB) project announced in a paper published this month that they’ve scaled up their original technology to cover 200 languages.

The project includes a dozen “low resource” European languages, like Scottish Gaelic, Galician, Irish, Ligurian, Bosnian, Icelandic and Welsh.

According to Meta, a low-resource language is one with fewer than one million sentences of usable data.
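As a rough illustration of that threshold, a language could be labelled by comparing its sentence count with the one-million mark. The function name, the constant and the sentence counts in this sketch are hypothetical, chosen only to show the comparison; they are not Meta’s code or figures.

```python
# Threshold taken from the article: fewer than one million usable sentences.
LOW_RESOURCE_THRESHOLD = 1_000_000


def is_low_resource(sentence_count: int) -> bool:
    """Return True when a language falls below the one-million-sentence mark."""
    return sentence_count < LOW_RESOURCE_THRESHOLD


# Made-up counts for illustration only.
example_counts = {"language_a": 250_000, "language_b": 40_000_000}
labels = {name: is_low_resource(n) for name, n in example_counts.items()}
print(labels)
```

The comparison is strict, so a language with exactly one million sentences would not count as low resource under this reading.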

Experts say that to improve the service, Meta should consult with native speakers and language specialists as the tool still needs work.

How does the project work?

Meta trains its artificial intelligence (AI) on data from the Opus repository, an open-source collection of authentic text, drawn from speech or writing in various languages, that can be used to train machine learning models.

Contributors to the dataset are experts in natural language processing (NLP): the subset of AI research that gives computers the ability to translate and understand human language.

Meta said they also use data mined from sources like Wikipedia in their databases.

The data is used to create what Meta calls a multilingual language model (MLM), where the AI can translate “between any pair … of languages without relying on English data,” according to their website.

The NLLB team evaluates the quality of their translations with a benchmark of human-translated sentences they’ve created that is also open source. This includes a list of “toxicity” words or phrases that humans can teach the software to filter out when translating text.
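One simple way a word list of this kind could be applied is to flag any translation that contains a listed term. The sketch below assumes that approach; the list entries and function names are hypothetical placeholders, and it is not a description of how the NLLB team’s own software works.

```python
import re

# Hypothetical placeholder entries; a real list would be curated by speakers.
TOXICITY_LIST = {"badword", "rude phrase"}


def find_listed_terms(text: str, terms: set[str]) -> list[str]:
    """Return the listed terms that appear in text as whole words or phrases."""
    lowered = text.lower()
    hits = []
    for term in sorted(terms):
        # \b keeps "badword" from matching inside a longer word.
        if re.search(r"\b" + re.escape(term) + r"\b", lowered):
            hits.append(term)
    return hits


def should_filter(translation: str) -> bool:
    """Flag a translation when it contains any listed term."""
    return bool(find_listed_terms(translation, TOXICITY_LIST))


print(should_filter("That was a Rude Phrase to use"))
print(should_filter("A perfectly polite sentence"))
```

Matching on whole words only is a deliberate choice here: it avoids flagging harmless words that happen to contain a listed term, at the cost of missing inflected forms.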

According to their latest paper, the NLLB team improved the accuracy of translations by 44 per cent from their first model, which was released in 2020.

When the technology is fully implemented, Meta estimates there will be more than 25 billion translations every day on Facebook News Feed, Instagram and other platforms.

‘Talk to the people’

William Lamb, professor of Gaelic ethnology and linguistics at the University of Edinburgh, is an expert in Scottish Gaelic, one of the low-resource languages identified by Meta in its NLLB project.

About 2.5 per cent of Scotland’s population, roughly 130,000 people, told the 2022 census that they have some skills in the 13th-century Celtic language.

There are also roughly 2,000 Gaelic speakers in eastern Canada, where it is a minority language. UNESCO classifies the language as “threatened” by extinction because of how few people speak it regularly.

Lamb noted that Meta’s translations in Scottish Gaelic are “not very good yet,” because of the crowdsourced data they’re using, despite their “heart being in the right place.”

“What they should do … if they really want to improve the translation is to talk to the people, the native Gaelic speakers that still live and breathe the language,” Lamb said.

That’s easier said than done, Lamb continued. Most of the native speakers are in their 70s and do not use computers, and the young speakers “use Gaelic habitually not in the way their grandparents do.”

A good alternative would be for Meta to strike a licensing agreement with the BBC, which works to preserve the language by creating high-quality online content in it.

‘This needs to be done by specialists’

Alberto Bugarín-Diz, professor of AI at the University of Santiago de Compostela in Spain, believes linguists like Lamb should work with Big Tech companies to refine the data sets available to them.

“This needs to be done by specialists who can revise the texts, correct them and update them with metadata that we could use,” Bugarín-Diz said.

“People from humanities and from a technical background like engineers need to work together, it’s a real need,” he added.

There is an advantage for Meta in using Wikipedia, Bugarín-Diz continued, because the data would reflect “almost every aspect of human life,” meaning the quality of the language could be much better than if only more formal texts were used.

But Bugarín-Diz suggests that Meta and other AI companies take the time to look for quality data online and then meet the legal requirements for using it, so that they do not break intellectual property laws.

Lamb, meanwhile, said that because of errors in the data, he won’t recommend that people use Meta’s translations unless the company makes some changes to their dataset.

“I wouldn’t say their translation abilities are at the point where the tools are actually useful,” Lamb said.

“I wouldn’t encourage anybody [to use them] as reliable language tools yet; I think they would be upfront in saying that too.”

Bugarín-Diz takes a different stance.

He believes that, if no one uses Meta’s translations, the company “will not be willing” to invest time and resources into improving them.

As with other AI tools, Bugarín-Diz believes, it’s a matter of knowing the weaknesses of the technology before using it.