Researchers say a new AI model may be more generalisable for cancer diagnosis and evaluation than existing deep learning methods.

Scientists have designed a new artificial intelligence (AI) model that may be able to diagnose and evaluate multiple types of cancer.

The new model, called the Clinical Histopathology Imaging Evaluation Foundation (CHIEF), was up to 36 per cent more effective at detecting cancer, determining a tumour’s origin, and predicting patient outcomes than other deep learning models, researchers said.

The team, led by Harvard Medical School researchers, wanted the model to be more widely applicable across different diagnostic tasks, as many current deep learning models for cancer are trained to perform specific functions.

“Unlike existing methods, our AI tool provides clinicians with accurate, real-time second opinions on cancer diagnoses by considering a broad spectrum of cancer types and variations,” Kun-Hsing Yu, assistant professor of biomedical informatics at Harvard Medical School and senior author of the study, told Euronews Health in an email.

How does CHIEF work?

The model was trained on more than 15 million different pathology images, “which enhances its reliability in diagnosing cancers with atypical features,” Yu added.

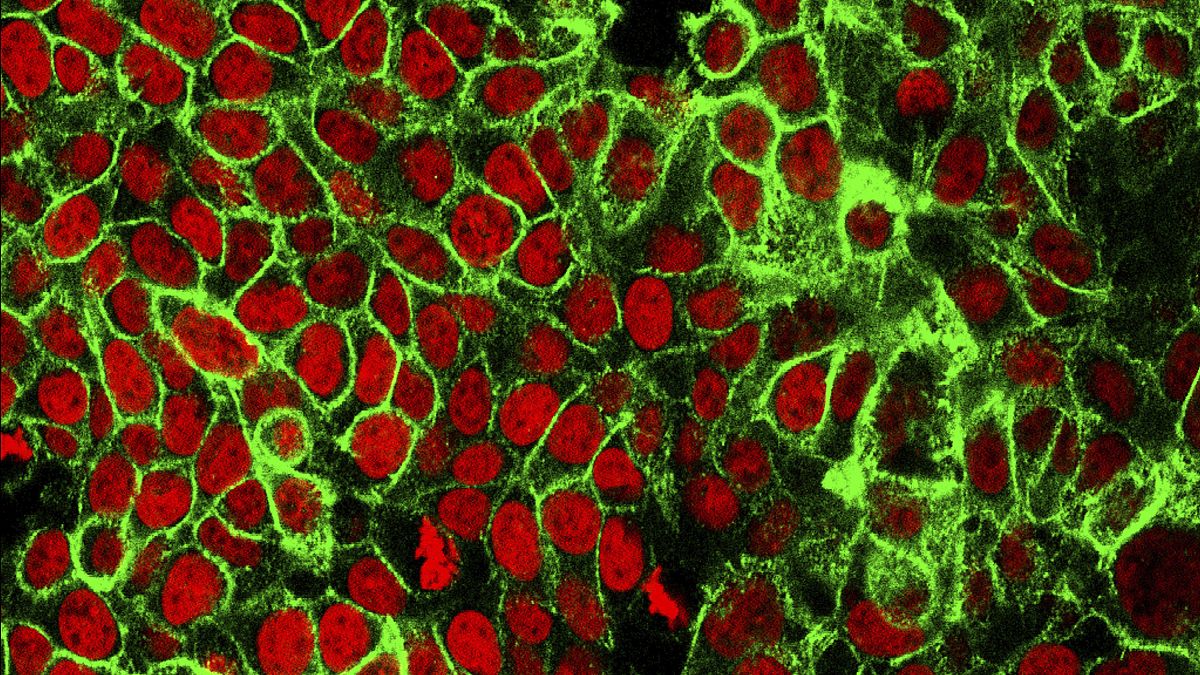

The researchers then used more than 60,000 high-resolution images of tissue slides “to further develop our AI model and fine-tune it for specific genetic and clinical prediction tasks”.

The researchers tested their model on more than 19,400 images from 24 hospitals and patient cohorts globally and published the findings in the journal Nature on Wednesday.

The team said the model works by reading digital slides of tumour tissues and can predict their molecular profile based on the features in the image. It can also identify features of a tumour that relate to how a patient may respond to treatment.

It achieved nearly 94 per cent accuracy in detecting cancer cells across 11 cancer types based on a metric for model performance.

“In certain applications, such as identifying colon cancer cells or predicting genetic mutations, our model's performance reached up to 99.43 per cent,” Yu said.

The researchers hope the AI model will help clinicians to more accurately evaluate a patient’s tumour.

The new model “represents a promising advancement” in applying AI to oncology, according to Ajit Goenka, a professor of radiology at Mayo Clinic in the US, who was not involved in the study.

He told Euronews Health in an email that it may “streamline preliminary diagnostic evaluations” and provide pathologists with a tool that analyses slides to “highlight critical areas for further examination”.

“Despite these capabilities, CHIEF's robustness across diverse clinical environments remains to be rigorously tested, and the potential for biases arising from its training on large, possibly non-representative datasets cannot be ignored,” said Goenka, who researches the use of AI to improve pancreatic cancer diagnosis.

What comes next for the AI model?

The remaining step before CHIEF is used in doctors’ offices is to receive regulatory approval.

“To accomplish this, we are launching a prospective clinical study to validate the CHIEF model in real-world clinical settings,” said Yu, who added that they are also working to extend its capability to detect rare cancers.

Goenka added that to be used clinically the model will need “extensive validation in real-world settings, encompassing diverse patient demographics and varying clinical conditions”.

“This validation is crucial to ensure the model's performance is not only theoretically superior but also practically reliable in everyday clinical practice,” he said.